For most of the past decade, digital transformation followed a simple logic: adopt more software, move to the cloud, automate workflows, and scale horizontally. Constraints were assumed to be temporary, solvable, or external. Compute would get cheaper. Networks would get faster. Security would be layered on later.

That logic no longer holds.

Digital transformation in 2026–2027 is no longer defined by possibility but by limits. Limits in energy. Limits in infrastructure. Limits in governance. Limits in organizational capacity to absorb change. What separates leaders from laggards now is not who adopts the most technology, but who understands which transformations are structurally viable under real-world constraints.

AI, cloud platforms, data infrastructure, cybersecurity, public-sector IT, and emerging technologies are no longer independent vectors of progress. They now form a tightly coupled system where movement in one domain creates pressure in others. Acceleration in AI creates stress on energy grids. Automation increases attack surfaces. Open innovation collides with regulatory mandates. Centralization improves efficiency while amplifying systemic risk.

Superway is tracking 15 digital transformation trends not because they are individually novel, but because together they reveal how the system is reorganizing itself.

What Changed in the Last 12–18 Months

Three developments fundamentally altered the trajectory of digital transformation:

AI Broke the Cloud Abstraction

For years, the cloud promised near-infinite scalability. AI shattered that abstraction.

Large-scale AI workloads exposed the physical reality behind “elastic compute.” Training and inference are not just software problems; they are energy, cooling, supply chain, and geopolitical problems. Data centers are no longer invisible infrastructure — they are contested assets that require permits, power contracts, and public approval.

As a result:

- Compute is becoming scarce by region, not globally.

- Infrastructure advantages are compounding faster than software advantages.

- Access to AI capability is becoming uneven across organizations and geographies.

Digital transformation is now constrained by where electrons can flow, not just where code can run.

Security and Governance Moved From Risk Management to Design Constraint

Cybersecurity, privacy, and compliance used to slow transformation at the edges. Now they shape architectures from the start.

Zero Trust mandates, AI governance frameworks, data residency laws, and sector-specific regulations are forcing organizations to redesign systems before deployment. Automation without security is no longer acceptable; neither is AI without auditability.

This is especially visible in:

- Federal IT modernization

- Regulated industries (finance, healthcare, energy)

- Cross-border data systems

Transformation speed is no longer determined by engineering velocity alone, but by governance throughput.

Platform Consolidation Accelerated While Openness Rebounded

Two opposing forces intensified simultaneously:

- Platform consolidation: Enterprises are reducing tool sprawl, concentrating workloads into fewer, deeper platforms (cloud providers, data warehouses, security stacks).

- Open-source resurgence: At the same time, open-source AI and interoperable protocols are gaining momentum as strategic hedges against lock-in.

This creates a paradoxical environment where organizations want fewer vendors, but more control.

Digital transformation strategies now oscillate between centralization for efficiency and openness for resilience.

System-Level Map of Digital Transformation

Before examining each trend individually, it is necessary to understand the system they operate within.

Digital transformation is no longer a linear pipeline (digitize → automate → optimize). It is a feedback system with reinforcing loops and choke points.

Below is the core dynamic shaping the next phase:

The AI–Infrastructure–Governance Loop

- → AI capabilities expand

- → Demand for compute, data, and real-time decision-making rises

- → Infrastructure expands (cloud, data centers, edge)

- → Energy demand and security risk increase

- → Regulatory and governance pressure intensifies

- → Deployment slows, centralizes, or fragments

- → Organizations seek efficiency, automation, and consolidation

- → AI is used to manage the complexity it created

This loop explains why:

- Cybersecurity automation scales alongside AI adoption

- Edge computing emerges as a response to centralized bottlenecks

- Open-source AI gains appeal as governance and cost pressures rise

- Federal IT modernization becomes a proving ground for constrained transformation

Where Bottlenecks Form

Superway sees bottlenecks forming in four places:

- Power and location – not all regions can support AI growth

- Security operations – human-driven security does not scale

- Data governance – fragmented data limits AI value

- Talent adaptation – cloud-native and AI-native skills remain scarce

Trends that relieve these bottlenecks accelerate. Trends that exacerbate them stall or consolidate.

Why This System Produces Winners and Losers

Digital transformation now rewards:

- Scale

- Integration depth

- Governance maturity

- Infrastructure foresight

It penalizes:

- Tool sprawl

- Short-term optimization

- Assumptions of infinite capacity

- Siloed decision-making

The 15 Digital Transformation Forces Reshaping the System

The following trends represent the structural foundation of the current digital transformation cycle. Together, they form a new blueprint for systemic resilience.

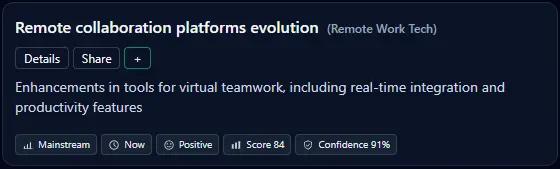

1. Remote Collaboration Platforms Evolution

From Connectivity Tools to Cognitive Infrastructure

What Is Actually Changing

Remote collaboration platforms are transitioning from communication utilities into cognitive infrastructure — systems designed to manage attention, context, and coordination at scale.

The first phase of remote work prioritized availability: video, chat, file sharing. The current phase prioritizes work coherence. Enterprises now expect collaboration platforms to:

- Preserve institutional memory

- Reduce meeting load through async coordination

- Integrate directly into execution systems (task management, dev pipelines, CRM)

- Embed AI to summarize, prioritize, and route information

This shift reflects a deeper realization: distributed work increases information entropy unless platforms actively counteract it.

Adoption Reality

- Mainstream in knowledge work organizations

- Uneven depth: most firms still use less than 40% of platform capability

- Heavy experimentation with AI copilots, limited operational trust so far

The constraint is no longer access — it is behavioral change and integration discipline.

Market Mechanics

Vendors are competing on:

- Depth of workflow integration

- AI-assisted coordination (summaries, action extraction)

- Platform consolidation (chat + docs + tasks + video)

Smaller point solutions are being absorbed or sidelined as enterprises reduce tool sprawl.

Second-Order Effects

- Collaboration data becomes a strategic asset for analytics and AI

- Security surface area expands, feeding cybersecurity automation needs

- Remote collaboration maturity enables global talent utilization — increasing competitive pressure

Winners and Losers

Winners

- Integrated platforms with extensible APIs

- Vendors embedding AI without fragmenting UX

Losers

- Standalone tools without ecosystem leverage

- Organizations treating collaboration as culture-only, not system design

Failure Modes

- AI overload increasing noise instead of reducing it

- Poor governance leading to knowledge silos inside platforms

12–24 Month Outlook

Expect fewer platforms, deeper usage, and rising demand for measurable productivity outcomes rather than engagement metrics.

What Superway Tracks

- Tool consolidation events

- AI feature adoption vs disablement

- Collaboration data feeding analytics pipelines

2. IT Asset Disposition Evolution

From End-of-Life Task to Strategic Control Point

What Is Actually Changing

IT asset disposition (ITAD) is being redefined as a governed lifecycle function, not a disposal service.

As hardware refresh cycles accelerate due to AI workloads and cloud-native infrastructure, enterprises face:

- Rising volumes of decommissioned equipment

- Increased regulatory scrutiny over e-waste

- Persistent data security risks beyond system shutdown

SuperSense research highlights ITAD’s evolution through AI-enabled tracking, compliance integration, and circular economy models.

Adoption Reality

- Breakout stage

- Mature in highly regulated industries

- Underdeveloped in mid-market enterprises

Most organizations still treat ITAD as downstream cleanup, despite upstream risk implications.

Market Mechanics

Drivers include:

- ESG reporting requirements

- Secure data destruction mandates

- Asset resale and recovery value optimization

Vendors increasingly integrate ITAD into asset lifecycle management platforms rather than standalone services.

Second-Order Effects

- Procurement decisions increasingly factor end-of-life compliance

- Data security policies extend beyond active infrastructure

- Sustainability metrics become auditable, not aspirational

Winners and Losers

Winners

- Enterprises embedding ITAD into procurement and security policy

- Vendors offering auditable, automated disposition workflows

Losers

- Organizations with opaque asset inventories

- Firms exposed to e-waste or data breach liability

Failure Modes

- Manual tracking at scale

- Treating sustainability as reporting rather than operational reality

12–24 Month Outlook

ITAD will become a board-level risk topic as ESG enforcement tightens and hardware turnover accelerates.

What Superway Tracks

- AI-based asset tracking adoption

- Regulatory enforcement trends

- Integration between ITAD and security platforms

3. Open-Source AI Competition

Strategic Optionality in an AI-Dependent World

What Is Actually Changing

Open-source AI models are no longer niche alternatives; they are strategic instruments used to rebalance power between enterprises and hyperscalers.

SuperSense research shows accelerating momentum driven by:

- Competitive benchmarks

- Developer ecosystems

- Enterprise fine-tuning use cases

The key shift is not performance parity — it is control parity.

Adoption Reality

- Breakout stage

- Widely piloted, selectively deployed

- Strong traction in regulated and cost-sensitive environments

Enterprises increasingly adopt hybrid AI stacks, blending proprietary and open models.

Market Mechanics

Open-source AI thrives where:

- Customization matters

- Cost predictability is critical

- Governance and auditability are required

This challenges pricing power and lock-in strategies of closed platforms.

Second-Order Effects

- Increased demand for internal AI ops and governance

- Talent competition shifts toward model optimization skills

- Slower, more deliberate AI deployment in regulated sectors

Winners and Losers

Winners

- Organizations with strong ML engineering capacity

- Open ecosystems enabling rapid iteration

Losers

- Firms assuming AI can remain purely outsourced

- Vendors relying solely on closed differentiation

Failure Modes

- Underestimating operational complexity

- Fragmented governance across models

12–24 Month Outlook

Expect sustained coexistence: closed models dominate convenience; open models dominate control.

What Superway Tracks

- Enterprise hybrid deployments

- Benchmark convergence

- Regulatory language referencing model transparency

4. AI Data Center Energy Constraints

When Digital Ambition Meets Physical Reality

What Is Actually Changing

AI workloads have exposed a fundamental truth: compute is bounded by energy.

SuperSense research documents escalating grid stress as AI data centers consume electricity at city-scale levels.

The result is a re-politicization of infrastructure once considered neutral.

Adoption Reality

- Constraint-driven, not adoption-driven

- Energy availability now shapes AI deployment geography

Organizations with secured access to electrical power scale faster; others stall regardless of software readiness.

Market Mechanics

- Long-term power contracts becoming strategic assets

- Cooling and efficiency innovations accelerating

- Regional disparities intensifying

Second-Order Effects

- Push toward smaller, optimized models

- Increased interest in edge inference

- Regulatory scrutiny framing AI as public infrastructure

Winners and Losers

Winners

- Hyperscalers with capital and energy access

- Energy-efficient AI vendors

Losers

- Mid-scale AI labs

- Regions with constrained grids

Failure Modes

- Overbuilding without grid coordination

- Ignoring public opposition and permitting risk

12–24 Month Outlook

Energy constraints will shape AI strategy more than model innovation.

What Superway Tracks

- Data center permit delays

- Regional power pricing anomalies

- AI efficiency benchmarks

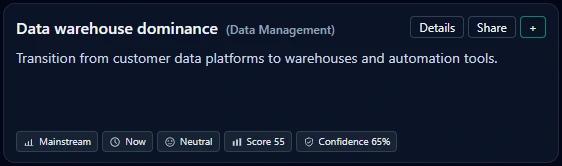

5. Data Warehouse Dominance

The Return of Centralized Decision Gravity

What Is Actually Changing

Despite architectural debates, enterprises are consolidating analytics and AI workloads into centralized, governed data warehouses.

SuperSense research confirms continued dominance driven by AI integration and governance needs.

This reflects a shift from experimentation to decision reliability.

Adoption Reality

- Mainstream

- Near-universal in large enterprises

- Increasing penetration into mid-market

Data warehouses now serve as AI input engines, not just reporting tools.

Market Mechanics

- Tight integration with AI and BI

- Pricing pressure driving optimization

- Competition between warehouse and lakehouse paradigms narrowing

Second-Order Effects

- Decline of fragmented analytics stacks

- Centralization of data governance authority

- Faster deployment of predictive analytics

Winners and Losers

Winners

- Platforms offering performance + governance

- Organizations with disciplined data models

Losers

- Tool-heavy analytics environments

- Teams bypassing governance for speed

Failure Modes

- Cost overruns from poor query discipline

- Central bottlenecks slowing innovation

12–24 Month Outlook

Warehouses will remain dominant, but pressure will mount to optimize cost and latency.

What Superway Tracks

- AI-native warehouse features

- Cost-to-query ratios

- Migration away from fragmented data stacks
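As a rough illustration of what a cost-to-query ratio could look like in practice, the sketch below averages cost per query by team. The record shape `(team, cost)` and the function name `cost_per_query` are assumptions made for this example; real warehouses expose billing and query history in their own formats.

```python
from collections import defaultdict


def cost_per_query(query_log):
    """Return {team: average cost per query} from (team, cost) records.

    `query_log` is a hypothetical iterable of (team_name, cost) tuples;
    the cost unit (credits, dollars) is whatever the billing export uses.
    """
    totals = defaultdict(lambda: [0.0, 0])  # team -> [total cost, query count]
    for team, cost in query_log:
        totals[team][0] += cost
        totals[team][1] += 1
    return {team: total / count for team, (total, count) in totals.items()}


# Illustrative values only, not measured data.
sample_log = [("marketing", 0.5), ("marketing", 1.5), ("finance", 4.0)]
ratios = cost_per_query(sample_log)
```

Tracking this ratio per team over time is one way to spot the "poor query discipline" failure mode before it becomes a cost overrun.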

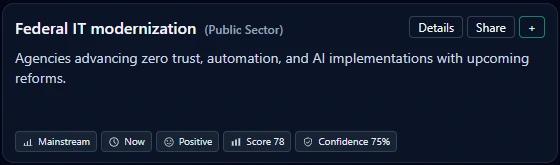

6. Federal IT Modernization

Mandate-Driven Transformation Under Maximum Constraint

What Is Actually Changing

Federal IT modernization is shifting from periodic “systems refresh” to a continuous compliance-and-capability program. The change is not primarily technological — it’s structural: agencies are being pushed to modernize because risk (cyber), policy (cloud + AI), and mission continuity now intersect.

SuperSense highlights ongoing initiatives and mandates driving upgrades: cloud migration, Zero Trust architecture, AI integration, legacy replacement, and cybersecurity compliance.

Adoption Reality

Federal modernization operates in a distinctive adoption environment:

- High urgency (threat landscape + mission exposure)

- High constraint (procurement cycles, compliance, budget rules)

- High asymmetry (some agencies transform rapidly; others remain legacy-bound)

This creates a pattern: modernization advances in waves aligned to budget cycles, directives, and vendor contract availability.

Market Mechanics

Federal modernization is a market with its own physics:

- Compliance gating (e.g., FedRAMP pathways) heavily influences vendor viability

- Contract vehicles & integrators shape what gets adopted (often more than technical merit)

- Security-by-default increases demand for solutions that “prove” posture, not just claim it

The net effect is that winners are rarely the most novel products — they’re the products that fit the procurement + compliance delivery system.

Second-Order Effects

- Federal standards “spill over” into regulated industries, raising baseline expectations

- Modernization increases the attack surface temporarily (transition periods are fragile)

- Zero Trust adoption accelerates cybersecurity automation demand (Trend 14), because manual ops don't scale

Winners and Losers

Winners

- Vendors with credible compliance posture and referenceable deployments

- Platforms that reduce implementation complexity (repeatable patterns, templates, auditable controls)

Losers

- Tools requiring deep customization without clear compliance mapping

- Agencies that modernize infrastructure without modernizing operating models

Failure Modes

- Cloud migration that replicates legacy architecture (“lift-and-shift stagnation”)

- AI adoption without governance, leading to audit pushback and program freezes

- Siloed modernization (security, data, apps modernize separately → brittle integration)

12–24 Month Outlook

Federal IT will remain a leading indicator of constraint-driven enterprise modernization, especially around Zero Trust, identity, automation, and AI governance.

What Superway Tracks

- Directive-driven adoption spikes (CISA, executive-level guidance)

- FedRAMP pipeline velocity and approvals

- Contract awards tied to Zero Trust and automation outcomes

7. AI Data Center Expansion

Compute Buildouts as Strategic Moats

What Is Actually Changing

AI data center expansion is not merely “more capacity.” It’s a shift toward compute as strategic sovereignty — where access to large-scale GPU infrastructure increasingly determines who can compete in AI-enabled industries.

SuperSense notes hyperscalers investing $100B+ into AI data centers amid demand for compute and emerging power bottlenecks.

Adoption Reality

This trend is dominated by a small set of actors:

- Hyperscalers expanding multi-region AI capacity

- Specialized operators building GPU-first facilities

- Enterprises purchasing reserved capacity or building dedicated infrastructure for sensitive workloads

The adoption curve is lopsided: organizations don’t “adopt” expansion — they gain or lose access to it.

Market Mechanics

Key mechanics shaping expansion:

- Capex scale as a barrier to entry

- GPU supply chains as strategic dependencies

- Permitting and power as gating constraints

- Cooling and density innovations as differentiators

Expansion is also increasingly linked to national policy and regional incentives, turning data center siting into a competitive game among states/regions.

Second-Order Effects

- Concentration of AI capability into a small number of infrastructure owners

- Acceleration of platform lock-in (compute + model + data tooling bundled)

- Increased viability of open-source AI as a hedge — but only if infrastructure is accessible

- Tight coupling to energy constraints: expansion creates the energy problem it must then solve

Winners and Losers

Winners

- Hyperscalers and vertically integrated infrastructure players

- Regions with cheap, abundant, and reliable power

- Efficiency tooling vendors (scheduling, utilization optimization, thermal management)

Losers

- Mid-scale AI labs lacking infrastructure leverage

- Regions with constrained grids and slow permitting

- Enterprises assuming AI scale is purely a software decision

Failure Modes

- Overbuilding in power-constrained regions → delays, political backlash

- Underutilization due to poor workload scheduling or model inefficiency

- Concentration risk: outages or supply constraints cascade across industries

12–24 Month Outlook

Expect continued buildout plus a parallel rise in compute allocation strategies: reserving capacity, workload prioritization, and sovereign/regulated AI infrastructure projects.

What Superway Tracks

- Capex announcements turning into permits and active construction

- GPU cluster density trends

- Regional “power-first” siting signals (grid upgrades, PPAs, new substations)

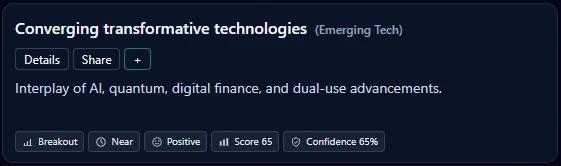

8. Converging Transformative Technologies

Where Breakthroughs Become Nonlinear — and Governance Gets Harder

What Is Actually Changing

The most consequential innovation is increasingly happening at intersections: AI with quantum, AI with biotech, nanotech with edge systems, and digital finance with dual-use capabilities.

SuperSense explicitly frames this as rising interest in convergence amid U.S. digital transformation initiatives, tracking subtopics like AI–quantum fusion and biotech digital twins.

Adoption Reality

Convergence doesn’t “adopt” like SaaS. It progresses through:

- Research partnerships and hybrid labs

- Pilot deployments in high-value domains (finance, defense, pharma, advanced manufacturing)

- Platform-layer emergence (tooling enabling cross-domain workflows)

Most enterprises will encounter convergence indirectly: through vendor roadmaps, regulatory requirements, or new competitive baselines.

Market Mechanics

Convergence is driven by:

- Funding patterns favoring cross-domain teams

- Data availability + compute enabling simulation and digital twin approaches

- Strategic competition (national security + industrial policy)

But convergence also increases complexity costs: teams need mixed expertise, timelines are longer, and regulatory overlap is common.

Second-Order Effects

- Creates new “stack wars” where ecosystems compete, not products

- Raises dual-use risk and accelerates governance needs (especially in public sector)

- Forces enterprises to rethink org design: siloed R&D struggles to keep up

Winners and Losers

Winners

- Organizations capable of interdisciplinary execution (platform + domain expertise)

- Ecosystems that provide shared tooling across domains (simulation, data governance, compute orchestration)

Losers

- Firms that treat emerging tech as isolated innovation labs with no operational pathway

- Regulatory-naïve deployments that trigger program shutdowns

Failure Modes

- “Convergence theater” (PR-driven partnerships without integration)

- Governance bottlenecks (legal/compliance can’t evaluate cross-domain risk fast enough)

- Talent scarcity (insufficient cross-disciplinary builders)

12–24 Month Outlook

Convergence will intensify, but the biggest near-term impact will be organizational: who can build repeatable pipelines from research to production.

What Superway Tracks

- Cross-domain patent and partnership density

- VC clustering around hybrid categories (AI+X)

- Government and defense procurement language referencing converged systems

9. VR Training Simulations for Employees

Solving the Workforce Bottleneck with Experiential Learning

What Is Actually Changing

VR training is evolving from novelty demos to operational training infrastructure, particularly where:

- Real-world training is expensive or dangerous

- Skills must be standardized quickly

- Performance needs measurement, not just completion

The key transformation: training shifts from “content delivery” to behavior rehearsal — repeatable, measurable, and safe.

Adoption Reality

VR training is most viable where ROI is visible:

- Safety and compliance (industrial, logistics, healthcare)

- High-turnover environments where training throughput matters

- Customer-facing roles where scenario practice improves outcomes

In knowledge work, adoption is slower, often blocked by hardware distribution and unclear ROI.

Market Mechanics

VR training economics are shaped by:

- Hardware cost + lifecycle management

- Content creation pipelines (a major bottleneck)

- Integration with LMS/HR systems and performance analytics

Platforms that reduce content production friction (templates, AI-assisted scenario generation, modular assets) will capture disproportionate value.

Second-Order Effects

- Measurable training data becomes a talent analytics asset

- Improves change adoption for cloud-native and security initiatives by reducing skill ramp time

- Can become a compliance artifact (audit-friendly training proof)

Winners and Losers

Winners

- Organizations with repetitive training needs and high failure costs

- Platforms integrating VR performance data into broader analytics

Losers

- Teams deploying VR without operational rollout plans

- Companies underestimating device ops and content maintenance

Failure Modes

- Content stagnation (scenarios become outdated)

- “Pilot purgatory” without integration into performance systems

- Poor UX leading to low adoption

12–24 Month Outlook

Expect gradual but steady growth, with VR training becoming a default in safety-critical training and an optional accelerator elsewhere.

What Superway Tracks

- Repeat usage (not just rollout)

- Reduction in incident rates / time-to-competency

- Content pipeline velocity and refresh cadence

10. Quantum Computing Applications in Finance

Asymmetric Optionality, Slow Ramp, High Leverage

What Is Actually Changing

Quantum computing in finance is progressing through targeted, economically motivated experiments, not broad transformation. The shift is from theoretical curiosity to use-case-specific feasibility, often via hybrid quantum-classical approaches.

Finance is a natural early domain because:

- Optimization problems are central

- Small performance improvements can yield large economic value

- Simulation and risk modeling are compute-intensive

Adoption Reality

Most institutions are in one of three modes:

- Capability building (teams, partnerships, sandbox access)

- Prototyping narrow algorithms (portfolio optimization, risk estimation)

- Monitoring and readiness (watching toolchain maturity)

Few are dependent on quantum outcomes — which is strategically correct.

Market Mechanics

Quantum finance advances when:

- Toolchains become usable by non-PhD teams

- Hybrid approaches show incremental value

- Vendor platforms reduce experimentation friction

The market is shaped by partnerships: banks + cloud providers + quantum hardware firms + universities.

Second-Order Effects

- Creates a new talent category: “quantum-aware” finance engineers

- Encourages more sophisticated simulation pipelines (often improving classical systems too)

- May become a regulatory topic if quantum advantages impact market fairness or security

Winners and Losers

Winners

- Institutions investing early in skills and experimentation discipline

- Ecosystems that make hybrid workflows practical

Losers

- Overcommitted deployments with no business case

- Firms waiting for “quantum certainty” and missing learning curves

Failure Modes

- Treating quantum as a binary breakthrough instead of a gradual capability

- Prototypes that never integrate into risk systems and decision processes

- Misalignment between research teams and business owners

12–24 Month Outlook

Expect incremental progress and increasing internal readiness programs rather than widespread production dependence.

What Superway Tracks

- Hybrid quantum-classical workflow adoption

- Talent movement and dedicated quantum finance teams

- Vendor ecosystem maturity (tooling, integration, reliability)

11. Cloud-Native Application Development

From “Cloud Migration” to Operational Speed as a Competitive Weapon

What Is Actually Changing

Cloud-native development is no longer a modernization preference — it’s becoming the default operating model for software delivery. The shift is from moving workloads to the cloud to designing systems that assume:

- Continuous delivery

- Elasticity and failure tolerance

- Infrastructure-as-code

- Service decomposition (microservices) and/or event-driven architectures

- Automated observability and security controls

The defining change isn't "containers" or "Kubernetes" by itself; it's the rise of platform engineering and internal developer platforms (IDPs) that convert cloud complexity into reusable patterns.

Adoption Reality

- Mainstream among tech-forward enterprises; still uneven in traditional sectors

- Most organizations sit in a hybrid state: cloud hosting exists, but cloud-native practices are partial (infrastructure modernized, delivery model still legacy)

- Adoption accelerates when businesses demand faster release cycles and resilience under load

Market Mechanics

Cloud-native maturity is driven by:

- Standardization on containers/Kubernetes and managed services

- Consolidation around cloud provider ecosystems

- Toolchains that reduce cognitive load (golden paths, templates, guardrails)

The biggest spend is increasingly not compute — it’s engineering time spent managing complexity. IDPs and platform teams exist because the cloud-native stack otherwise becomes an “accidental tax.”

Second-Order Effects

- Faster shipping increases security and governance pressure (manual reviews don’t scale)

- Microservices increase attack surface and observability demands

- Cloud-native accelerates AI integration because deployment pipelines and data flows become modular and repeatable

Winners and Losers

Winners

- Organizations that invest in platform engineering and standardization

- Vendors offering integrated dev + ops + security workflows

Losers

- Firms migrating to cloud but retaining monolithic delivery models

- Teams accumulating tool sprawl without shared patterns

Failure Modes

- “Kubernetes complexity trap” without strong platform abstraction

- Microservice proliferation without domain modeling discipline

- Over-automation that hides risk rather than controlling it

12–24 Month Outlook

Cloud-native will increasingly be measured by time-to-change and time-to-recover (DORA-style outcomes), not by “cloud adoption %.”

What Superway Tracks

- Growth of platform engineering teams

- IDP adoption and “golden path” usage

- Release frequency, rollback rates, and MTTR improvements (proxy signals)
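The proxy signals above can be derived from a plain deployment log. The following is a minimal sketch, assuming each deployment record carries a timestamp, a failure flag, and an optional restoration time. The `Deployment` class, its field names, and `delivery_metrics` are hypothetical names for this example, and recovery time is measured here from the failed deployment to restoration, which is one of several possible definitions.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional


@dataclass
class Deployment:
    deployed_at: datetime
    failed: bool = False                    # True if the change caused a rollback or incident
    restored_at: Optional[datetime] = None  # when service was restored after a failure


def delivery_metrics(deployments, window_days):
    """Compute release frequency, change failure rate, and mean time to recover."""
    total = len(deployments)
    failures = [d for d in deployments if d.failed]
    # Only failures with a known restoration time contribute to recovery time.
    recoveries = [d.restored_at - d.deployed_at
                  for d in failures if d.restored_at is not None]
    mttr = sum(recoveries, timedelta()) / len(recoveries) if recoveries else None
    return {
        "deploys_per_week": total / (window_days / 7),
        "change_failure_rate": len(failures) / total if total else 0.0,
        "mean_time_to_recover": mttr,
    }


# Illustrative two-week log, not real data.
log = [
    Deployment(datetime(2026, 1, 1, 9)),
    Deployment(datetime(2026, 1, 3, 9), failed=True,
               restored_at=datetime(2026, 1, 3, 10)),
    Deployment(datetime(2026, 1, 8, 9)),
    Deployment(datetime(2026, 1, 10, 9), failed=True,
               restored_at=datetime(2026, 1, 10, 12)),
]
metrics = delivery_metrics(log, window_days=14)
```

Measuring outcomes this way keeps the focus on time-to-change and time-to-recover rather than on how much of the estate is hosted in the cloud.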

12. AI-Driven Predictive Analytics in Retail

From Retrospective BI to Real-Time Operational Decisioning

What Is Actually Changing

Retail predictive analytics is shifting from dashboards to decision systems. AI is increasingly used to:

- Forecast demand and reduce stockouts

- Optimize pricing and promotions

- Improve inventory allocation across locations

- Detect fraud, returns abuse, and shrink patterns

- Personalize experiences with real-time context

The core change is that predictive analytics is moving “closer to the operational edge” of the business — into replenishment, routing, merchandising, and customer interaction loops.

Adoption Reality

- Breakout to mainstream, depending on retailer scale

- Large retailers have multi-year programs; mid-market adoption often happens via packaged tools and platforms

- Adoption success correlates with data foundation quality (Trend 5) and execution discipline (process integration)

Market Mechanics

Retail analytics spending clusters around three levers:

- Inventory economics (carrying costs vs stockouts)

- Margin optimization (pricing/promo elasticity)

- Labor productivity (staffing and fulfillment)

Because small predictive improvements can generate outsized margin impacts, ROI can be strong — but only if models are operationalized into workflows.

Second-Order Effects

- Higher dependence on integrated data pipelines and warehouses

- Increased risk of brittle automation (models drift, promo dynamics change)

- Governance becomes a constraint: retailers need explainability when AI decisions affect pricing fairness and consumer trust

Winners and Losers

Winners

- Retailers with unified customer + inventory data and fast experimentation cycles

- Platforms that connect forecasting → decision → execution (not just insights)

Losers

- Analytics teams producing models that never make it into replenishment, pricing, or operations

- Organizations relying on personalization without privacy-ready governance

Failure Modes

- Model drift during seasonality shifts and supply shocks

- Over-personalization triggering trust backlash

- Fragmented ownership (analytics team builds; ops team ignores)

12–24 Month Outlook

The next wave is closed-loop retail AI: systems that learn from outcomes and adjust continuously, with guardrails.
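One way to picture a guarded closed loop is sketched below: a monitor tracks rolling forecast error and flags drift, while a clamp limits how far a model-proposed price can move in a single step. The class and function names, the window size, and both thresholds are illustrative assumptions, not a description of any particular retail platform.

```python
from collections import deque


class DriftMonitor:
    """Tracks the rolling mean absolute percentage error (MAPE) of recent forecasts."""

    def __init__(self, window=4, threshold=0.25):
        self.errors = deque(maxlen=window)  # oldest error drops out automatically
        self.threshold = threshold

    def record(self, forecast, actual):
        # Periods with zero actual demand are skipped to avoid dividing by zero.
        if actual != 0:
            self.errors.append(abs(forecast - actual) / abs(actual))

    def rolling_mape(self):
        return sum(self.errors) / len(self.errors) if self.errors else 0.0

    def drifted(self):
        # Only judge drift once the window is full, so one bad period cannot trigger it.
        return len(self.errors) == self.errors.maxlen and self.rolling_mape() > self.threshold


def guarded_price(current, proposed, max_change=0.10):
    """Clamp a model-proposed price to within +/- max_change of the current price."""
    low = current * (1 - max_change)
    high = current * (1 + max_change)
    return min(max(proposed, low), high)


# Hypothetical usage: three stable periods, then three periods after a demand shock.
monitor = DriftMonitor(window=3, threshold=0.2)
for forecast, actual in [(100, 100), (100, 105), (100, 98)]:
    monitor.record(forecast, actual)
stable = monitor.drifted()

for forecast, actual in [(100, 200), (100, 180), (100, 160)]:
    monitor.record(forecast, actual)
after_shock = monitor.drifted()

# The model proposes a 50% increase; the guardrail caps the move at 25%.
capped = guarded_price(current=20.0, proposed=30.0, max_change=0.25)
print(stable, after_shock, capped)
```

In a real deployment the drift flag would feed a retraining or fallback decision, and the clamp would sit alongside other guardrails such as fairness checks and human approval for large moves.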

What Superway Tracks

- “Decisioning” adoption (models pushing actions, not reports)

- Drift-monitoring and retraining cadence

- Integration depth with inventory and pricing systems

13. Blockchain Interoperability Protocols

From Fragmented Chains to Cross-Chain Utility

What Is Actually Changing

Blockchain interoperability is attempting to solve one fundamental barrier: fragmentation. Enterprises and ecosystems cannot rely on blockchain systems at scale if value and data cannot move reliably across networks.

Interoperability protocols aim to enable:

- Cross-chain asset transfers

- Shared messaging and state verification

- Multi-chain application architectures

- Reduced vendor/chain lock-in
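State verification is the most technical item on that list. A common building block is the Merkle inclusion proof, which lets one network check that a message was included in another network's committed state without receiving the full state. The sketch below is a simplified illustration under stated assumptions (SHA-256, a plain binary tree, an odd node paired with itself, no domain separation between leaves and internal nodes). Real interoperability protocols differ in tree layout and hashing, and must also verify that the root itself is trustworthy.

```python
import hashlib


def h(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()


def merkle_root(leaves):
    """Root of a simple binary Merkle tree over a non-empty list of byte strings."""
    level = [h(leaf) for leaf in leaves]
    while len(level) > 1:
        if len(level) % 2 == 1:
            level.append(level[-1])  # pair an odd node with itself
        level = [h(level[i] + level[i + 1]) for i in range(0, len(level), 2)]
    return level[0]


def merkle_proof(leaves, index):
    """Sibling hashes from leaf to root, each tagged with whether the sibling sits on the left."""
    level = [h(leaf) for leaf in leaves]
    proof = []
    while len(level) > 1:
        if len(level) % 2 == 1:
            level.append(level[-1])
        sibling = index ^ 1  # neighbour within the same pair
        proof.append((level[sibling], sibling < index))
        level = [h(level[i] + level[i + 1]) for i in range(0, len(level), 2)]
        index //= 2
    return proof


def verify(leaf, proof, root):
    """Recompute the path from the leaf upward and compare with the trusted root."""
    node = h(leaf)
    for sibling, sibling_is_left in proof:
        node = h(sibling + node) if sibling_is_left else h(node + sibling)
    return node == root


# Hypothetical messages committed on a source chain.
messages = [b"msg-0", b"msg-1", b"msg-2"]
root = merkle_root(messages)
proof = merkle_proof(messages, 2)

print(verify(b"msg-2", proof, root))     # the genuine message checks out
print(verify(b"tampered", proof, root))  # an altered message does not
```

The receiving side needs only the root, the message, and a proof whose length grows logarithmically with the number of messages, which is why this pattern suits cross-network verification.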

The core shift is from “which chain wins?” to “how chains connect safely.”

Adoption Reality

- Nascent to breakout, with adoption concentrated in finance-adjacent domains and digital asset infrastructure

- Enterprise adoption remains cautious due to security concerns and uncertain standards

- Most real-world implementations focus on controlled interoperability (permissioned networks, consortiums, or regulated asset flows)

Market Mechanics

Interoperability adoption depends on:

- Regulatory clarity for cross-chain assets

- Security credibility (bridges have been a major exploit vector historically)

- Standardization and ecosystem support

Economically, interoperability matters when it reduces friction in settlements, identity verification, supply chain provenance, or tokenized asset workflows.

Second-Order Effects

- Interoperability increases the blast radius of failures (cross-chain risk propagation)

- Governance complexity increases (multiple networks, multiple rule sets)

- Demand for security automation rises because manual monitoring across chains does not scale

Winners and Losers

Winners

- Protocols and platforms that can demonstrate security and compliance compatibility

- Institutions positioned to orchestrate regulated cross-chain flows

Losers

- Chains dependent on isolation as a moat

- Projects built on bridges without robust security models

Failure Modes

- Bridge exploits and trust collapse

- Standards fragmentation (too many “interoperability solutions”)

- Regulatory shock (sudden compliance requirements breaking cross-chain operations)

12–24 Month Outlook

Expect gradual, regulated progress: more “interoperability in controlled lanes” than an open cross-chain free-for-all.

What Superway Tracks

- Adoption of standards and audited interoperability frameworks

- Regulatory language referencing cross-chain asset flows

- Security incident frequency and severity in interoperability systems

14. Cybersecurity Automation Tools

When Human Security Operations Hit Scale Limits

What Is Actually Changing

Cybersecurity automation is shifting from productivity enhancement to operational necessity. The volume, speed, and sophistication of threats exceed human processing capacity.

Automation is expanding across:

- Detection triage and alert correlation

- Incident response playbooks run through security orchestration, automation, and response (SOAR) platforms

- Identity and access governance workflows

- Continuous compliance evidence collection

- AI-driven threat hunting and anomaly detection

The defining change is the movement toward machine-speed defense with human oversight, rather than human-first response.
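In practice, "machine-speed defense with human oversight" usually comes down to an explicit policy that decides which responses may run unattended. The sketch below is one hypothetical shape for such a gate: the action names, asset tiers, and confidence threshold are all invented for illustration and do not reflect any specific SOAR product.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Detection:
    action: str        # proposed response, e.g. "isolate_host"
    confidence: float  # detector confidence in [0, 1]
    asset_tier: str    # "low", "medium", or "critical" (hypothetical tiers)


# Hypothetical policy: which actions may run without a human, and on which asset tiers.
AUTO_ALLOWED = {
    "quarantine_email": {"low", "medium", "critical"},
    "isolate_host": {"low", "medium"},
    "disable_account": {"low"},
}
MIN_CONFIDENCE = 0.9  # assumed threshold below which a human always decides


def route(detection: Detection) -> str:
    """Return "auto" to execute immediately or "human_review" to queue for an analyst."""
    allowed_tiers = AUTO_ALLOWED.get(detection.action, set())
    if detection.confidence >= MIN_CONFIDENCE and detection.asset_tier in allowed_tiers:
        return "auto"
    return "human_review"  # default-deny: anything not explicitly allowed goes to a person


print(route(Detection("isolate_host", 0.95, "medium")))    # within policy
print(route(Detection("isolate_host", 0.95, "critical")))  # tier not allowed for this action
print(route(Detection("wipe_device", 0.99, "low")))        # action not in policy at all
```

The default-deny design choice matters more than the thresholds: unknown actions and high-impact assets fall back to human review, which limits the business disruption a false positive can cause.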

Adoption Reality

- Breakout in large enterprises and regulated sectors

- Faster adoption where security staffing is constrained and compliance is heavy

- Increasingly driven by executive risk posture, not just CISO preference

Organizations are learning that adding tools increases alerts; without automation, tool sprawl creates operational collapse.

Market Mechanics

Cyber automation value is driven by:

- Reduced time-to-detect and time-to-respond

- Reduced analyst workload

- Improved audit readiness and reporting

Automation platforms that integrate broadly (identity, endpoint, cloud, SIEM) outperform point tools because they can coordinate response across surfaces.
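A minimal sketch of what cross-surface correlation means in practice: alerts from different tools that concern the same entity and arrive close together are merged into one incident. The alert fields, the five-minute window, and the grouping rule are assumptions chosen for illustration; production platforms use richer entity resolution and scoring.

```python
from collections import defaultdict


def correlate(alerts, window_seconds=300):
    """Group alerts on the same entity when each follows the previous one within window_seconds."""
    by_entity = defaultdict(list)
    for alert in sorted(alerts, key=lambda a: a["ts"]):
        by_entity[alert["entity"]].append(alert)

    incidents = []
    for entity, items in by_entity.items():
        current = [items[0]]
        for alert in items[1:]:
            if alert["ts"] - current[-1]["ts"] <= window_seconds:
                current.append(alert)  # still part of the same burst
            else:
                incidents.append({"entity": entity, "alerts": current})
                current = [alert]      # gap too large: start a new incident
        incidents.append({"entity": entity, "alerts": current})
    return incidents


# Hypothetical alerts from four different tools; "ts" is seconds since an arbitrary start.
alerts = [
    {"ts": 0, "entity": "host-a", "source": "endpoint"},
    {"ts": 60, "entity": "host-a", "source": "siem"},
    {"ts": 120, "entity": "host-a", "source": "identity"},
    {"ts": 1000, "entity": "host-a", "source": "endpoint"},
    {"ts": 30, "entity": "host-b", "source": "cloud"},
]

incidents = correlate(alerts)
print(len(alerts), "alerts ->", len(incidents), "incidents")
```

Here five alerts collapse into three incidents, and the first incident carries evidence from endpoint, SIEM, and identity sources together, which is the context a coordinated response needs.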

Second-Order Effects

- Automation becomes a governance issue: who is allowed to auto-remediate?

- Attackers adapt, increasing adversarial pressure on detection models

- Automation makes security posture more measurable, affecting procurement and insurance

Winners and Losers

Winners

- Organizations with strong identity foundations (Zero Trust alignment)

- Platforms that reduce alert volume via correlation, not just more detection

Losers

- Firms stacking tools without orchestration

- Teams that automate blindly and trigger outages or access disruptions

Failure Modes

- Over-automation causing business disruption (false positives with heavy impact)

- Incomplete integration leading to partial response and brittle workflows

- “Automation theater” — buying SOAR but not operationalizing playbooks

12–24 Month Outlook

Cybersecurity will increasingly resemble site reliability engineering (SRE): continuous monitoring, automated remediation, measurable reliability outcomes.

What Superway Tracks

- Adoption of auto-remediation policies

- Integration density across the security stack

- Mean-time-to-detect/respond improvements and outage incidents caused by security automation
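For readers who want the last metric pinned down, the sketch below computes mean time to detect and mean time to respond from incident timestamps. Definitions vary between organizations; here detection is measured from occurrence and response from detection to resolution. The incident records are hypothetical.

```python
from datetime import datetime
from statistics import mean


def mean_minutes(incidents, start_key, end_key):
    """Average elapsed minutes between two timestamps across all incidents."""
    return mean(
        (incident[end_key] - incident[start_key]).total_seconds() / 60
        for incident in incidents
    )


# Hypothetical incident log.
incidents = [
    {
        "occurred": datetime(2026, 1, 5, 10, 0),
        "detected": datetime(2026, 1, 5, 10, 30),
        "resolved": datetime(2026, 1, 5, 11, 30),
    },
    {
        "occurred": datetime(2026, 1, 9, 12, 0),
        "detected": datetime(2026, 1, 9, 12, 10),
        "resolved": datetime(2026, 1, 9, 12, 40),
    },
]

mttd = mean_minutes(incidents, "occurred", "detected")  # assumed definition: occurrence to detection
mttr = mean_minutes(incidents, "detected", "resolved")  # assumed definition: detection to resolution
print(mttd, mttr)
```

Tracking these alongside a count of outages caused by automation itself keeps the speed gains honest.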

15. Edge Computing for IoT Devices

Distributed Intelligence as a Response to Latency, Cost, and Resilience Constraints

What Is Actually Changing

Edge computing is evolving from “processing outside the cloud” into distributed decision architecture. As IoT deployments scale, sending all data to centralized cloud systems becomes:

- Too slow (latency-sensitive use cases)

- Too expensive (bandwidth and storage)

- Too risky (connectivity failures, data exposure)

Edge systems increasingly run:

- Real-time analytics

- Local ML inference

- Event filtering and aggregation

- Privacy-preserving processing
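Event filtering and aggregation is the simplest of these to illustrate. In the sketch below, an edge node reduces a window of raw sensor readings to one summary plus any out-of-range values, and only that payload would travel upstream. The function name, payload fields, and thresholds are assumptions for illustration.

```python
from statistics import mean


def summarize_window(readings, low, high):
    """Reduce a window of raw readings to a summary plus any out-of-range values.

    Only the returned payload would be sent to the cloud; raw readings stay on the device.
    """
    if not readings:
        return None
    anomalies = [r for r in readings if r < low or r > high]
    return {
        "count": len(readings),
        "mean": mean(readings),
        "min": min(readings),
        "max": max(readings),
        "anomalies": anomalies,
        "needs_local_action": bool(anomalies),  # the edge can react without a round trip
    }


# Hypothetical temperature readings collected during one window.
window = [20, 21, 19, 20, 35, 20]
summary = summarize_window(window, low=10, high=30)
print(summary)
```

Six readings become one message, and the out-of-range value is both preserved for the cloud and available for an immediate local response, which addresses the latency and bandwidth constraints listed above.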

Adoption Reality

- Breakout in industrial and infrastructure environments (manufacturing, energy, logistics)

- Emerging in retail and smart environments where real-time response matters

- Often deployed in hybrid models: edge for immediate action, cloud for aggregation and learning

Adoption increases where operations cannot tolerate downtime, and where on-prem constraints are unavoidable.

Market Mechanics

Edge economics are shaped by:

- Hardware lifecycle and management overhead

- Connectivity reliability and cost

- The need for secure, maintainable software delivery at the edge (cloud-native meets physical deployment)

This creates demand for platforms that can orchestrate edge fleets similarly to cloud workloads.

Second-Order Effects

- Edge increases cybersecurity complexity: more nodes, more endpoints

- Edge can reduce AI energy load by shifting some inference away from centralized data centers (Trend 4 interaction)

- Drives new governance questions: data residency and local processing standards

Winners and Losers

Winners

- Industries requiring real-time control and high resilience

- Platforms that make edge deployment observable, secure, and updatable at scale

Losers

- Deployments without fleet management and patching discipline

- Organizations that treat edge as “set and forget hardware” (it becomes a liability)

Failure Modes

- Security gaps due to inconsistent patching and identity

- Fragmentation across device types and runtime environments

- Edge deployments that become unmaintainable “shadow IT”

12–24 Month Outlook

Edge will expand as AI moves into real-time operations, but successful deployments will depend on cloud-native discipline applied to physical systems.

What Superway Tracks

- Edge fleet orchestration adoption

- Update/patch cadence at the edge

- Edge inference growth vs cloud inference growth (a proxy for architecture shift)

Conclusion

Digital transformation is no longer defined by ambition or access to technology. It is defined by constraint management—by how effectively organizations coordinate energy, infrastructure, data, security, governance, and human capacity inside increasingly interdependent systems. The fifteen trends tracked in this report do not point toward a single dominant architecture or winning platform. Instead, they reveal a landscape where advantage accrues to those who understand where scale breaks, where consolidation helps, where openness is necessary, and where restraint is strategic. In this environment, transformation is less about adopting the newest tool and more about recognizing which pressures are structural and which are temporary. Superway’s role is to surface those pressures early—before they harden into limits—so leaders can act with clarity rather than urgency.